声明:本文内容来自英文内容全部来自wikipedia,中文翻译is inprogress......

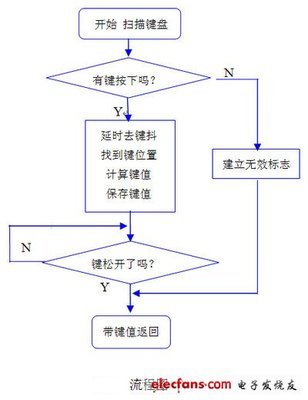

二维实剃刀矩阵M奇异值分解示意图。[Visualization of the SVD of a two-dimensional, real shearing matrixM.].

![奇异值分解[SingularValueDecomposition] singular value 矩阵](http://img.aihuau.com/images/02111102/02042626t015813016d2d37a915.jpg)

首先,我们看到蓝色的单位圆盘及两个规范的单位矢量。[First, we see the unitdisc in blue together with the two canonical unit vectors.]然后我们看到M的作用,它把一个圆扭曲为椭圆。[We then see the action of M, whichdistorts the disk to an ellipse.]SVD把M分解成三个简单的变换:旋转V*,沿着被旋转坐标轴的拉伸Σ以及第二个旋转U。[The SVD decomposesM into three simple transformations: a rotationV*, a scaling Σ along the rotated coordinate axesand a second rotation U.] 椭圆半轴σ1和σ2的长度是M的奇异值。[The lengthsσ1 and σ2 of the semi-axes of the ellipse are the singular values of M.]

在线性代数中,奇异值分解(SVD)是实或复矩阵的分解,它在信号处理和统计学中有许多有用的应用。[In linear algebra, the singular valuedecomposition (SVD) is a factorization of a real or complex matrix, with many useful applications insignal processing and statistics.]

形式上来说,m*n阶的实或复矩阵M的奇异值分解是形式如下的分解:[Formally, the singular valuedecomposition of an m×n real or complex matrix M is afactorization of the form]

其中,U是一个m*m阶的实或复单位阵,Σ是一个m*n阶的矩形对角阵,在对角线上有非负的实数值。V*(V的共轭转置)是一个n*n的实或复单位阵。Σ的对角项Σij称之为M的奇异值。U的m个列以及对应的V的n个列被分别称为M的左奇异矢量和右奇异矢量。[whereU is anΣ的对角项 m×m real or complex unitary matrix, Σ is an m×n rectangular diagonal matrix withnonnegative real numbers on the diagonal, and V* (theconjugate transpose of V) is ann×n real or complex unitary matrix. The diagonal entriesΣi,i of Σ are known as the singular values of M. The mcolumns of U and the n columns of V are calledthe left-singular vectors and right-singular vectorsof M, respectively.]

奇异值分解和特征值分解密切相关,即:[The singular value decomposition and theeigendecomposition are closely related.Namely:]

采用SVD的应用包括计算伪逆局阵、数据的最小平方拟合、矩阵逼近以及确定矩阵的秩、range以及nullspace等。[Applications which employ the SVD include computing thepseudoinverse, least squares fitting of data, matrixapproximation, and determining the rank, range and null space of a matrix.]

Contents |

Statement of the theorem

Suppose M is an m×n matrix whose entries come from thefield K, which is either the fieldof real numbers or the field of complex numbers. Then there exists afactorization of the form

where U is an m×m unitary matrix over K, the matrix Σ isan m×n diagonal matrix with nonnegative real numberson the diagonal, and the n×n unitary matrix V*denotes the conjugate transpose of V. Such afactorization is called the singular value decomposition ofM.

The diagonal entries of Σ are known as the singular values of M. A commonconvention is to list the singular values in descending order. Inthis case, the diagonal matrix Σ is uniquely determined by M(though the matrices U and V are not).

Intuitive interpretations

Rotation, scaling, rotation

In the special but common case in which M is just an m×msquare matrix with positive determinant whose entries are plain realnumbers, then U, V*, and Σ are m×m matrices of realnumbers as well, Σ can be regarded as a scaling matrix, and U and V* can be viewed asrotation matrices.

If the abovementioned conditions are met, the expression can thus be intuitively interpreted as a composition (or sequence) of three geometrical transformations: a rotation, a scaling, and another rotation. Forinstance, the figure above explains how a shear matrix can be described as such asequence.

Singular values as semiaxis of an ellipse orellipsoid

As shown in the figure, the singular values can be interpreted as thesemiaxes of an ellipse in 2D. This concept can be generalizedto n-dimensional Euclidean space, with the singular values ofany n×n square matrix being viewed as thesemiaxes of an n-dimensional ellipsoid. See below for further details.

The columns of U and V are orthonormalbases

Since U and V* are unitary, the columns of each ofthem form a set of orthonormal vectors, which can beregarded as basis vectors. By the definition ofunitary matrix, the same is true for their conjugate transposesU* and V. In short, U, U*, V,and V* are orthonormal bases.

Example

Consider the 4×5 matrix

A singular value decomposition of this matrix is given by

Notice is zero outside of the diagonal and one diagonal element is zero.Furthermore, because the matrices and are unitary, multiplying by their respectiveconjugate transposes yields identity matrices, as shown below. In thiscase, because and are real valued, they each are an orthogonal matrix.

and

This particular singular value decomposition is not unique.Choosing such that

is also a valid singular value decomposition.

Singular values, singular vectors, and their relation tothe SVD

A non-negative real number σ is a singular value for M if and only ifthere exist unit-length vectors u inKm and v inKn such that

The vectors u and v are calledleft-singular and right-singular vectors for σ,respectively.

In any singular value decomposition

the diagonal entries of Σ are equal to the singular values ofM. The columns of U and V are, respectively,left- and right-singular vectors for the corresponding singularvalues. Consequently, the above theorem implies that:

A singular value for which we can find two left (or right)singular vectors that are linearly independent is calleddegenerate.

Non-degenerate singular values always have unique left- andright-singular vectors, up to multiplication by a unit-phase factoreiφ (for the real case up to sign).Consequently, if all singular values of M are non-degenerateand non-zero, then its singular value decomposition is unique, upto multiplication of a column of U by a unit-phase factorand simultaneous multiplication of the corresponding column ofV by the same unit-phase factor.

Degenerate singular values, by definition, have non-uniquesingular vectors. Furthermore, if u1 andu2 are two left-singular vectors which bothcorrespond to the singular value σ, then any normalized linearcombination of the two vectors is also a left-singular vectorcorresponding to the singular value σ. The similar statement istrue for right-singular vectors. Consequently, if M hasdegenerate singular values, then its singular value decompositionis not unique.

Applications of the SVD

Pseudoinverse

The singular value decomposition can be used for computing thepseudoinverse of a matrix.Indeed, the pseudoinverse of the matrix M with singularvalue decomposition is

where Σ+ is the pseudoinverse of Σ, which is formedby replacing every nonzero diagonal entry by its reciprocal and transposing theresulting matrix. The pseudoinverse is one way to solve linear least squares problems.

Solving homogeneous linear equations

A set of homogeneous linear equations canbe written as for a matrix and vector . A typical situation is that is known and a non-zero is to be determined which satisfies the equation. Such an belongs to 's null space and is sometimes called a (right)null vector of . can be characterized as a right-singular vector corresponding to asingular value of that is zero. This observation means that if is a square matrix and has no vanishing singularvalue, the equation has no non-zero as a solution. It also means that if there are several vanishingsingular values, any linear combination of the correspondingright-singular vectors is a valid solution. Analogously to thedefinition of a (right) null vector, a non-zero satisfying , with denoting the conjugate transpose of , is called a left null vector of .

Total least squares minimization

A total least squares problem refersto determining the vector which minimizes the 2-norm of a vector under the constraint . The solution turns out to be the right-singular vector of corresponding to the smallest singular value.

Range, null space and rank

Another application of the SVD is that it provides an explicitrepresentation of the range and nullspace of a matrix M. The right-singular vectorscorresponding to vanishing singular values of M span thenull space of M. E.g., the null space is spanned by the lasttwo columns of in the above example. The left-singular vectors corresponding tothe non-zero singular values of M span the range ofM. As a consequence, the rank of M equals the number ofnon-zero singular values which is the same as the number ofnon-zero diagonal elements in .

In numerical linear algebra the singular values can be used todetermine the effective rank of a matrix, as rounding error may lead to small but non-zerosingular values in a rank deficient matrix.

Low-rank matrix approximation

Some practical applications need to solve the problem ofapproximating a matrix with another matrix , said truncated, which has a specific rank . In the case that the approximation is based on minimizing theFrobenius norm of the difference between and under the constraint that it turns out that the solution is given by the SVD of , namely

where is the same matrix as except that it contains only the largest singular values (the other singular values are replaced byzero). This is known as the Eckart–Young theorem, as it wasproved by those two authors in 1936 (although it was later found tohave been known to earlier authors; see Stewart 1993).

Also see CUR matrix approximation for anotherlow-rank approximation that is easier to interpret.

Separable models

The SVD can be thought of as decomposing a matrix into aweighted, ordered sum of separable matrices. By separable, we meanthat a matrix can be written as an outer product of two vectors , or, in coordinates, . Specifically, the matrix M can be decomposed as:

Here and are the ith columns of the corresponding SVDmatrices, are the ordered singular values, and each is separable. The SVD can be used to find the decomposition of animage processing filter into separable horizontal and verticalfilters. Note that the number of non-zero is exactly the rank of the matrix.

Separable models often arise in biological systems, and the SVDdecomposition is useful to analyze such systems. For example, somevisual area V1 simple cells' receptive fields can be welldescribed[1]by a Gabor filter in the space domain multiplied by amodulation function in the time domain. Thus, given a linear filterevaluated through, for example, reverse correlation, one canrearrange the two spatial dimensions into one dimension, thusyielding a two dimensional filter (space, time) which can bedecomposed through SVD. The first column of U in the SVDdecomposition is then a Gabor while the first column of Vrepresents the time modulation (or vice-versa). One may then definean index of separability, , which is the fraction of the power in the matrix M which isaccounted for by the first separable matrix in thedecomposition.[2]

Nearest orthogonal matrix

It is possible to use the SVD of to determine the orthogonal matrix closest to . The closeness of fit is measured by the Frobenius norm of . The solution is the product .[3]This intuitively makes sense because an orthogonal matrix wouldhave the decomposition where is the identity matrix, so that if then the product amounts to replacing the singular values with ones.

A similar problem, with interesting applications in shape analysis, is the orthogonal Procrustes problem,which consists of finding an orthogonal matrix which most closely maps to . Specifically,

where denotes the Frobenius norm.

This problem is equivalent to finding the nearest orthogonalmatrix to a given matrix .

The Kabsch algorithm

The Kabsch algorithm (called Wahba's problem in other fields) uses SVD tocompute the optimal rotation (with respect to least-squaresminimization) that will align a set of points with a correspondingset of points. It is used, among other applications, to compare thestructures of molecules.

Other examples

The SVD is also applied extensively to the study of linearinverse problems, and is useful in theanalysis of regularization methods such as that of Tikhonov. It is widely used instatistics where it is related to principal component analysis andto Correspondence analysis, and insignal processing and pattern recognition. It is also used inoutput-only modal analysis, where the non-scaledmode shapes can be determined from the singularvectors. Yet another usage is latent semantic indexing in naturallanguage text processing.

The SVD also plays a crucial role in the field of quantum information, in a form oftenreferred to as the Schmidt decomposition. Through it,states of two quantum systems are naturally decomposed, providing anecessary and sufficient condition for them to be entangled: if the rank of the matrix is larger than one.

One application of SVD to rather large matrices is in numerical weather prediction,where Lanczos methods are used to estimatethe most linearly quickly growing few perturbations to the centralnumerical weather prediction over a given initial forward timeperiod – i.e. the singular vectors corresponding to the largestsingular values of the linearized propagator for the global weatherover that time interval. The output singular vectors in this caseare entire weather systems. These perturbations are then runthrough the full nonlinear model to generate an ensemble forecast, giving a handle onsome of the uncertainty that should be allowed for around thecurrent central prediction.

Another application of SVD for daily life is that point inperspective view can be unprojected in a photo using the calculatedSVD matrix, this application leads to measuring length (a.k.a. thedistance of two unprojected points in perspective photo) by markingout the 4 corner points of known-size object in a single photo.PRuler is a demo to implement this application by taking a photo ofa regular credit card

Relation to eigenvalue decomposition

The singular value decomposition is very general in the sensethat it can be applied to any m × n matrix whereaseigenvalue decomposition canonly be applied to certain classes of square matrices.Nevertheless, the two decompositions are related.

Given an SVD of M, as described above, the following tworelations hold:

The right-hand sides of these relations describe the eigenvaluedecompositions of the left-hand sides. Consequently:

In the special case that M is a normal matrix, which by definition must besquare, the spectral theorem says that it can beunitarily diagonalized using a basis of eigenvectors, so that it can be written for a unitary matrix U and a diagonal matrix D. WhenM is also positive semi-definite, thedecomposition is also a singular value decomposition.

However, the eigenvalue decomposition and the singular valuedecomposition differ for all other matrices M: theeigenvalue decomposition is where U is not necessarily unitary and D is notnecessarily positive semi-definite, while the SVD is where Σ is a diagonal positive semi-definite, and Uand V are unitary matrices that are not necessarily relatedexcept through the matrix M.

Existence

An eigenvalue λ of a matrix is characterized by thealgebraic relation M u = λ u. When M isHermitian, a variational characterization isalso available. Let M be a real n × n symmetric matrix. Definef:Rn → Rby f(x) = xT M x. By the extreme value theorem, this continuousfunction attains a maximum at some u when restricted to theclosed unit sphere {||x|| ≤ 1}. By the Lagrange multipliers theorem, unecessarily satisfies

where the nabla symbol, , is the del operator.

A short calculation shows the above leads to M u = λu (symmetry of M is needed here). Therefore λ isthe largest eigenvalue of M. The same calculation performedon the orthogonal complement of u gives the next largesteigenvalue and so on. The complex Hermitian case is similar; theref(x) = x* M x is a real-valued function of2n real variables.

Singular values are similar in that they can be describedalgebraically or from variational principles. Although, unlike theeigenvalue case, Hermiticity, or symmetry, of M is no longerrequired.

This section gives these two arguments for existence of singularvalue decomposition.

Based on the spectral theorem

Let M be an m-by-n matrix with complexentries. M*M is positive semidefinite and Hermitian. By thespectral theorem, there exists a unitaryn-by-n matrix V such that

where D is diagonal and positive definite. PartitionV appropriately so we can write

Therefore V1*M*MV1 = D andV2*M*MV2 = 0. The latter meansMV2 = 0.

Also, since V is unitary,V1*V1 = I,V2*V2 = I andV1V1* +V2V2* = I.

Define

Then

We see that this is almost the desired result, except thatU1 and V1 are not unitary ingeneral, but merely isometries. To finish the argument, one simplyhas to "fill out" these matrices to obtain unitaries. For example,one can choose U2 such that

is unitary.

Define

where extra zero rows are added or removed to make thenumber of zero rows equal the number of columns ofU2. Then

which is the desired result:

Notice the argument could begin with diagonalizing MM*rather than M*M (This shows directly that MM* andM*M have the same non-zero eigenvalues).

Based on variational characterization

The singular values can also be characterized as the maxima ofuTMv, considered as a function of uand v, over particular subspaces. The singular vectors arethe values of u and v where these maxima areattained.

Let M denote an m × n matrix with realentries. Let and denote the sets of unit 2-norm vectors inRm and Rnrespectively. Define the function

for vectors u ∈ and v ∈ . Consider the function σ restricted to × . Since both and are compact sets, their product is also compact. Furthermore, sinceσ is continuous, it attains a largest value for at least onepair of vectors u ∈ and v ∈ . This largest value is denoted σ1 and thecorresponding vectors are denoted u1 andv1. Since is the largest value of it must be non-negative. If it were negative, changing the sign ofeither u1 or v1 would make itpositive and therefore larger.

Statement: u1, v1 areleft and right-singular vectors of M with correspondingsingular value σ1.

Proof: Similar to the eigenvalues case, by assumption thetwo vectors satisfy the Lagrange multiplier equation:

After some algebra, this becomes

and

Multiplying the first equation from left by and the second equation from left by and taking ||u|| = ||v|| = 1 into account gives

So σ1 = 2 λ1 = 2λ2. By properties of the functional φdefined by

we have

Similarly,

This proves the statement.

More singular vectors and singular values can be found bymaximizing σ(u, v) over normalized u,v which are orthogonal to u1 andv1, respectively.

The passage from real to complex is similar to the eigenvaluecase.

Geometric meaning

Because U and V are unitary, we know that thecolumns u1,...,um of Uyield an orthonormal basis ofKm and the columnsv1,...,vn of V yield anorthonormal basis of Kn (with respect tothe standard scalar products on these spaces).

The linear transformationT:Kn →Km that takes a vector x toMx has a particularly simple description with respect tothese orthonormal bases: we have T(vi) =σi ui, for i =1,...,min(m,n), where σi is thei-th diagonal entry of Σ, andT(vi) = 0 for i> min(m,n).

The geometric content of the SVD theorem can thus be summarizedas follows: for every linear mapT:Kn →Km one can find orthonormal bases ofKn and Km suchthat T maps the i-th basis vector ofKn to a non-negative multiple of thei-th basis vector of Km, and sendsthe left-over basis vectors to zero. With respect to these bases,the map T is therefore represented by a diagonal matrix withnon-negative real diagonal entries.

To get a more visual flavour of singular values and SVDdecomposition —at least when working on real vector spaces—consider the sphere S of radius one inRn. The linear map T maps thissphere onto an ellipsoid in Rm.Non-zero singular values are simply the lengths of the semi-axes of this ellipsoid. Especially whenn=m, and all the singular values are distinct andnon-zero, the SVD of the linear map T can be easily analysedas a succession of three consecutive moves:consider the ellipsoid T(S) and specifically itsaxes; then consider the directions inRn sent by T onto these axes. Thesedirections happen to be mutually orthogonal. Apply first anisometry v* sending these directions to the coordinate axesof Rn. On a second move, apply an endomorphism d diagonalized along thecoordinate axes and stretching or shrinking in each direction,using the semi-axes lengths of T(S) as stretchingcoefficients. The composition d o v*then sends the unit-sphere onto an ellipsoid isometric toT(S). To define the third and last move u,apply an isometry to this ellipsoid so as to carry it overT(S). As can be easily checked, the compositionu o d o v*coincides with T.

Calculating the SVD

Numerical Approach

The SVD of a matrix M is typically computed by a two-stepprocedure. In the first step, the matrix is reduced to a bidiagonal matrix. This takesO(mn2) floating-point operations (flops),assuming that m ≥ n (this formulation uses thebig O notation). The second step is to computethe SVD of the bidiagonal matrix. This step can only be done withan iterative method (as with eigenvalue algorithms). However, inpractice it suffices to compute the SVD up to a certain precision,like the machine epsilon. If this precision isconsidered constant, then the second step takes O(n)iterations, each costing O(n) flops. Thus, the first step ismore expensive, and the overall cost is O(mn2)flops (Trefethen& Bau III 1997, Lecture 31).

The first step can be done using Householder reflections for a cost of4mn2 − 4n3/3 flops, assumingthat only the singular values are needed and not the singularvectors. If m is much larger than n then it isadvantageous to first reduce the matrix M to a triangularmatrix with the QR decomposition and then use Householderreflections to further reduce the matrix to bidiagonal form; thecombined cost is 2mn2 + 2n3flops (Trefethen& Bau III 1997, Lecture 31).

The second step can be done by a variant of the QRalgorithm for the computation of eigenvalues, which was firstdescribed by Golub & Kahan (1965). The LAPACKsubroutine DBDSQR[4]implements this iterative method, with some modifications to coverthe case where the singular values are very small (Demmel& Kahan 1990). Together with a first step usingHouseholder reflections and, if appropriate, QR decomposition, thisforms the DGESVD[5]routine for the computation of the singular valuedecomposition.

The same algorithm is implemented in the GNU Scientific Library (GSL). The GSLalso offers an alternative method, which uses a one-sided Jacobiorthogonalization in step 2 (GSLTeam 2007). This method computes the SVD of the bidiagonalmatrix by solving a sequence of 2-by-2 SVD problems, similar to howthe Jacobi eigenvalue algorithmsolves a sequence of 2-by-2 eigenvalue methods (Golub& Van Loan 1996, §8.6.3). Yet another methodfor step 2 uses the idea of divide-and-conquereigenvalue algorithms (Trefethen& Bau III 1997, Lecture 31).

Analytic result of 2-by-2 SVD

The singular values of a 2-by-2 matrix can be foundanalytically. Let the matrix be where are complex numbers that parameterize the matrix, is the identity matrix, and denote the Pauli matrices. Then its two singularvalues are given by

Reduced SVDs

In applications it is quite unusual for the full SVD, includinga full unitary decomposition of the null-space of the matrix, to berequired. Instead, it is often sufficient (as well as faster, andmore economical for storage) to compute a reduced version of theSVD. The following can be distinguished for an m×nmatrix M of rank r:

Thin SVD

Only the n column vectors of U corresponding tothe row vectors of V* are calculated. The remaining columnvectors of U are not calculated. This is significantlyquicker and more economical than the full SVD ifn<<m. The matrixUn is thus m×n, Σn isn×n diagonal, and V is n×n.

The first stage in the calculation of a thin SVD will usually bea QR decomposition of M, which can makefor a significantly quicker calculation ifn<<m.

Compact SVD

Only the r column vectors of U and r rowvectors of V* corresponding to the non-zero singular valuesΣr are calculated. The remaining vectors of U andV* are not calculated. This is quicker and more economicalthan the thin SVD ifr<<n. The matrixUr is thus m×r, Σr isr×r diagonal, and Vr* isr×n.

Truncated SVD

Only the t column vectors of U and t rowvectors of V* corresponding to the t largest singularvalues Σt are calculated. The rest of the matrix isdiscarded. This can be much quicker and more economical than thecompact SVD if t<<r.The matrix Ut is thus m×t,Σt is t×t diagonal, andVt* is t×n'.

Of course the truncated SVD is no longer an exact decompositionof the original matrix M, but as discussed above, the approximate matrix is in a very useful sense the closest approximation to Mthat can be achieved by a matrix of rank t.

Norms

Ky Fan norms

The sum of the k largest singular values of M is amatrix norm, the Ky Fank-norm of M.

The first of the Ky Fan norms, the Ky Fan 1-norm is the same asthe operator norm of M as a linear operatorwith respect to the Euclidean norms of Kmand Kn. In other words, the Ky Fan 1-normis the operator norm induced by the standard l2Euclidean inner product. For this reason, it is also called theoperator 2-norm. One can easily verify the relationship between theKy Fan 1-norm and singular values. It is true in general, for abounded operator M on (possibly infinite dimensional)Hilbert spaces

But, in the matrix case, M*M½ is a normal matrix, so ||M* M||½is the largest eigenvalue of M* M½, i.e. thelargest singular value of M.

The last of the Ky Fan norms, the sum of all singular values, isthe trace norm (also known as the 'nuclear norm'),defined by ||M|| = Tr[(M*M)½] (theeigenvalues of M* M are the squares of the singularvalues).

Hilbert–Schmidt norm

The singular values are related to another norm on the space ofoperators. Consider the Hilbert–Schmidt inner producton the n × n matrices, defined by . So the induced norm is . Since trace is invariant under unitary equivalence, thisshows

where are the singular values of M. This is called theFrobenius norm, Schatten 2-norm, orHilbert–Schmidt norm of M. Direct calculation showsthat if

the Frobenius norm of M coincides with

Tensor SVD

Unfortunately, the problem of finding a low rank approximationto a tensor is ill-posed. In other words, there doesn't exist abest possible solution, but instead a sequence of better and betterapproximations that converge to infinitely large matrices. But inspite of this, there are several ways of attempting thisdecomposition. There exist two types of tensor decompositions whichgeneralise SVD to multi-way arrays. One decomposition decomposes atensor into a sum of rank-1 tensors, see Candecomp-PARAFAC(CP) algorithm. The CP algorithm should not be confused with arank-R decomposition but, for a given N, itdecomposes a tensor into a sum of N rank-1 tensors thatoptimally fit the original tensor. The second type of decompositioncomputes the orthonormal subspaces associated with the differentaxes or modes of a tensor (orthonormal row space, column space,fiber space, etc.). This decomposition is referred to in theliterature as the Tucker3/TuckerM, M-mode SVD,multilinear SVD and sometimes referred to as a higher-orderSVD (HOSVD). In addition, multilinearprincipal component analysis in multilinear subspace learninginvolves the same mathematical operations as Tucker decomposition,being used in a different context of dimensionality reduction.

Bounded operators on Hilbert spaces

The factorization can be extended to a bounded operator M on a separableHilbert space H. Namely, for any bounded operator M,there exist a partial isometry U, a unitaryV, a measure space (X,μ),and a non-negative measurable f such that

where is the multiplication by f onL2(X, μ).

This can be shown by mimicking the linear algebraic argument forthe matricial case above. VTf V* is the uniquepositive square root of M*M, as given by the Borel functional calculus forself adjoint operators. The reason whyU need not be unitary is because, unlike the finitedimensional case, given an isometry U1 withnontrivial kernel, a suitable U2 may not be foundsuch that

is a unitary operator.

As for matrices, the singular value factorization is equivalentto the polar decomposition for operators:we can simply write

and notice that U V* is still a partial isometry whileVTf V* is positive.

Singular values and compact operators

To extend notion of singular values and left/right-singularvectors to the operator case, one needs to restrict to compact operators. Itis a general fact that compact operators on Banach spaces have only discrete spectrum. Thisis also true for compact operators on Hilbert spaces, sinceHilbert spaces are a special case of Banachspaces. If T is compact, every nonzero λ in itsspectrum is an eigenvalue. Furthermore, a compact self adjointoperator can be diagonalized by its eigenvectors. If M iscompact, so is M*M. Applying the diagonalization result, theunitary image of its positive square root Tf hasa set of orthonormal eigenvectors {ei}corresponding to strictly positive eigenvalues{σi}. Foranyψ∈H,

where the series converges in the norm topology on H.Notice how this resembles the expression from the finitedimensional case. The σi 's are called thesingular values of M. {U ei} and {Vei} can be considered the left- and right-singularvectors of M respectively.

Compact operators on aHilbert space are the closure of finite-rank operators in the uniformoperator topology. The above series expression gives an explicitsuch representation. An immediate consequence of this is:

Theorem M is compact if and only if M*M iscompact.

History

The singular value decomposition was originally developed bydifferential geometers, who wished todetermine whether a real bilinear form could be made equal to another byindependent orthogonal transformations of the two spaces it actson. Eugenio Beltrami and Camille Jordan discovered independently, in1873 and 1874 respectively, that the singular values of thebilinear forms, represented as a matrix, form a complete set of invariants for bilinear forms underorthogonal substitutions. James Joseph Sylvester also arrived atthe singular value decomposition for real square matrices in 1889,apparently independent of both Beltrami and Jordan. Sylvestercalled the singular values the canonical multipliers of thematrix A. The fourth mathematician to discover the singularvalue decomposition independently is Autonne in 1915, who arrivedat it via the polar decomposition. The first proof ofthe singular value decomposition for rectangular and complexmatrices seems to be by CarlEckart and Gale Young in 1936;[6]they saw it as a generalization of the principal axis transformation for Hermitian matrices.

In 1907, Erhard Schmidt defined an analog ofsingular values for integral operators (which are compact,under some weak technical assumptions); it seems he was unaware ofthe parallel work on singular values of finite matrices. Thistheory was further developed by Émile Picard in 1910, who is the first to callthe numbers singular values (or rather, valeurssingulières).

Practical methods for computing the SVD date back to Kogbetliantz in 1954, 1955 and Hestenes in 1958.[7]resembling closely the Jacobi eigenvalue algorithm,which uses plane rotations or Givens rotations. However, these werereplaced by the method of Gene Golub and William Kahan published in 1965,[8]which uses Householder transformations orreflections. In 1970, Golub and Christian Reinsch[9]published a variant of the Golub/Kahan algorithm that is still theone most-used today.

See also

Notes

- ^ DeAngelis GC, Ohzawa I, Freeman RD(October 1995). "Receptive-fielddynamics in the central visual pathways". TrendsNeurosci. 18 (10): 451–8. doi:10.1016/0166-2236(95)94496-R.PMID8545912.

- ^ Depireux DA, Simon JZ, Klein DJ,Shamma SA (March 2001). "Spectro-temporal response field characterization with dynamicripples in ferret primary auditory cortex". J.Neurophysiol. 85 (3): 1220–34. PMID11247991.

- ^ The SingularValue Decomposition in Symmetric (Lowdin) Orthogonalization andData Compression

- ^ Netlib.org

- ^ Netlib.org

- ^ Eckart, C.; Young, G. (1936). "The approximationof one matrix by another of lower rank". Psychometrika 1 (3): 211–8. doi:10.1007/BF02288367.

- ^ Hestenes, M. R. (1958). "Inversion ofMatrices by Biorthogonalization and Related Results". Journal ofthe Society for Industrial and Applied Mathematics 6(1): 51–90. doi:10.1137/0106005. JSTOR2098862. MR0092215.

- ^ Golub, G. H.; Kahan, W. (1965). "Calculating the singularvalues and pseudo-inverse of a matrix". Journal of the Societyfor Industrial and Applied Mathematics: Series B, NumericalAnalysis 2 (2): 205–224. doi:10.1137/0702016. JSTOR2949777. MR0183105.

- ^ Golub, G. H.; Reinsch, C. (1970). "Singularvalue decomposition and least squares solutions". NumerischeMathematik 14 (5): 403–420. doi:10.1007/BF02163027.MR1553974.

References

External links

| This article's use of external links may not followWikipedia's policies or guidelines. Please improve this article by removing excessive or inappropriate external links, andconverting useful links where appropriate into footnote references.(January 2010) |

爱华网

爱华网