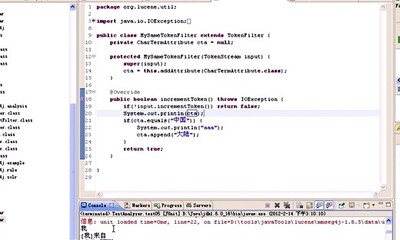

Analyzer package demo (Lucene 3.6, `Version.LUCENE_36`):

```java
import java.io.Reader;
import java.io.StringReader;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.SimpleAnalyzer;
import org.apache.lucene.analysis.StopAnalyzer;
import org.apache.lucene.analysis.TokenStream;
import org.apache.lucene.analysis.WhitespaceAnalyzer;
import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.analysis.tokenattributes.CharTermAttribute;
import org.apache.lucene.util.Version;

public class abc {
    // Extra sample inputs; main() below does not use them
    private static String testString1 = "The quick brown fox jumped over the lazy dogs";
    private static String testString2 = "xy&z mail is - xyz@sohu.com";

    // Shared helper: runs the analyzer over the text and prints every token followed by " ;".
    // It uses the generic TokenStream type instead of casting to a concrete class such as
    // StopFilter, because the concrete type depends on how each analyzer builds its chain.
    private static void printTokens(Analyzer analyzer, String testString) throws Exception {
        Reader r = new StringReader(testString);
        TokenStream ts = analyzer.tokenStream("", r);
        CharTermAttribute cab = ts.addAttribute(CharTermAttribute.class);
        ts.reset(); // the TokenStream workflow calls reset() before consuming tokens
        while (ts.incrementToken()) {
            System.out.print(cab.toString() + " ;");
        }
        ts.end();
        ts.close();
        System.out.println();
    }

    // All output goes to System.out; mixing System.err and System.out can interleave the lines
    public static void testWhitespace(String testString) throws Exception {
        System.out.println("=====Whitespace analyzer====");
        System.out.println("Method: split on whitespace");
        printTokens(new WhitespaceAnalyzer(Version.LUCENE_36), testString);
    }

    public static void testSimple(String testString) throws Exception {
        System.out.println("=====Simple analyzer====");
        System.out.println("Method: split on whitespace and all kinds of symbols");
        printTokens(new SimpleAnalyzer(Version.LUCENE_36), testString);
    }

    public static void testStop(String testString) throws Exception {
        System.out.println("=====Stop analyzer====");
        // Stop words are words with no real meaning, such as is, are, in, on, the
        System.out.println("Method: split on whitespace and symbols, then remove stop words (is, are, in, on, the, ...)");
        printTokens(new StopAnalyzer(Version.LUCENE_36), testString);
    }

    public static void testStandard(String testString) throws Exception {
        System.out.println("=====Standard analyzer====");
        // Chinese text is split into one token per character
        System.out.println("Method: mixed splitting with stop-word removal; supports Chinese");
        printTokens(new StandardAnalyzer(Version.LUCENE_36), testString);
    }

    public static void main(String[] args) throws Exception {
        // The Chinese part means "I am Li Han from Beijing"; it shows how each analyzer treats Chinese
        String input = "i am lihan, i am a boy, i come from Beijing,我是来自北京的李晗";
        testWhitespace(input);
        testSimple(input);
        testStop(input);
        testStandard(input);
    }
}
```
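The first three analyzers' behaviour can be approximated without setting up Lucene at all. The following is a rough plain-Java sketch under stated assumptions and is not Lucene's implementation. The class `AnalyzerSketch`, its methods and the `STOP_WORDS` set are hypothetical names, and the stop list is only a small subset chosen for illustration. `whitespace` splits on whitespace and keeps case and punctuation. `simple` treats every run of letters as a token and lower-cases it. `stop` runs `simple` and then drops stop words. StandardAnalyzer's grammar-based tokenizer is not reproduced here.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Plain-Java approximation (no Lucene) of the whitespace, simple and stop analyzers.
public class AnalyzerSketch {
    // Hypothetical, deliberately small stop-word subset assumed for illustration
    static final Set<String> STOP_WORDS = new HashSet<>(Arrays.asList(
            "a", "an", "and", "are", "in", "is", "of", "on", "the", "to"));

    // Split on runs of whitespace; case and punctuation are kept
    public static List<String> whitespace(String text) {
        List<String> tokens = new ArrayList<>();
        for (String t : text.trim().split("\\s+")) {
            if (!t.isEmpty()) tokens.add(t);
        }
        return tokens;
    }

    // Every run of letters becomes one lower-cased token; any non-letter ends the token
    public static List<String> simple(String text) {
        List<String> tokens = new ArrayList<>();
        StringBuilder current = new StringBuilder();
        for (int i = 0; i < text.length(); ) {
            int cp = text.codePointAt(i);
            if (Character.isLetter(cp)) {
                current.appendCodePoint(Character.toLowerCase(cp));
            } else if (current.length() > 0) {
                tokens.add(current.toString());
                current.setLength(0);
            }
            i += Character.charCount(cp);
        }
        if (current.length() > 0) tokens.add(current.toString());
        return tokens;
    }

    // The simple split, followed by removal of the assumed stop words
    public static List<String> stop(String text) {
        List<String> tokens = new ArrayList<>();
        for (String t : simple(text)) {
            if (!STOP_WORDS.contains(t)) tokens.add(t);
        }
        return tokens;
    }

    public static void main(String[] args) {
        String s = "The quick brown fox jumped over the lazy dogs";
        System.out.println(whitespace(s)); // [The, quick, brown, fox, jumped, over, the, lazy, dogs]
        System.out.println(simple(s));     // [the, quick, brown, fox, jumped, over, the, lazy, dogs]
        System.out.println(stop(s));       // [quick, brown, fox, jumped, over, lazy, dogs]
    }
}
```

`Character.isLetter` returns true for Chinese characters, so a letter-based split like `simple` keeps a run such as 我是来自北京的李晗 together as a single token. The comma in `Beijing,` still ends the preceding token.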

爱华网