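Let's start with the socket mechanism itself. A socket is an IPC mechanism that provides a uniform interface to many different protocols. In Linux it is built from several parts. To discuss them, we first look at a few data structures: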

```c
struct net_proto_family {
    int            family;
    int            (*create)(struct socket *sock, int protocol);
    short          authentication;
    short          encryption;
    short          encrypt_net;
    struct module  *owner;
};
```

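This structure is defined in the Linux kernel, in include/linux/net.h of the kernel source. family identifies the protocol (address family) number. The create function pointer is the function called to create a socket of this protocol, and owner is the protocol's module structure. The same header also defines the number of protocols: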

```c
#define NPROTO 64
```

Next, the definition of the socket itself:

```c
struct socket {
    socket_state          state;
    unsigned long         flags;
    struct proto_ops      *ops;
    struct fasync_struct  *fasync_list;
    struct file           *file;
    struct sock           *sk;
    wait_queue_head_t     wait;
    short                 type;
};
```

The ops pointer refers to the operations that can be performed on this socket. It is defined as follows:

```c
struct proto_ops {
    int            family;
    struct module  *owner;
    int            (*release)(struct socket *sock);
    int            (*bind)(struct socket *sock,
                           struct sockaddr *myaddr,
                           int sockaddr_len);
    int            (*connect)(struct socket *sock,
                              struct sockaddr *vaddr,
                              int sockaddr_len, int flags);
    int            (*socketpair)(struct socket *sock1,
                                 struct socket *sock2);
    int            (*accept)(struct socket *sock,
                             struct socket *newsock, int flags);
    int            (*getname)(struct socket *sock,
                              struct sockaddr *addr,
                              int *sockaddr_len, int peer);
    unsigned int   (*poll)(struct file *file, struct socket *sock,
                           struct poll_table_struct *wait);
    int            (*ioctl)(struct socket *sock, unsigned int cmd,
                            unsigned long arg);
    int            (*listen)(struct socket *sock, int len);
    int            (*shutdown)(struct socket *sock, int flags);
    int            (*setsockopt)(struct socket *sock, int level,
                                 int optname, char __user *optval, int optlen);
    int            (*getsockopt)(struct socket *sock, int level,
                                 int optname, char __user *optval, int __user *optlen);
    int            (*sendmsg)(struct kiocb *iocb, struct socket *sock,
                              struct msghdr *m, size_t total_len);
    int            (*recvmsg)(struct kiocb *iocb, struct socket *sock,
                              struct msghdr *m, size_t total_len,
                              int flags);
    int            (*mmap)(struct file *file, struct socket *sock,
                           struct vm_area_struct *vma);
    ssize_t        (*sendpage)(struct socket *sock, struct page *page,
                               int offset, size_t size, int flags);
};
```

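This definition consists mostly of function pointers. When a socket of a given protocol is created, that protocol's own functions are assigned to these pointers. This separates each protocol's implementation from the generic socket machinery.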
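The following user-space sketch shows that separation. It is not kernel code. The demo_ structures and functions are hypothetical simplifications, and the only operation modelled is sendmsg. The generic layer only ever calls sock->ops->sendmsg. Which implementation runs depends entirely on the ops table the socket was given when it was created.

```c
#include <string.h>

/* Hypothetical, heavily simplified stand-ins for struct socket / struct proto_ops. */
struct demo_socket;

struct demo_proto_ops {
    int family;
    int (*sendmsg)(struct demo_socket *sock, const char *buf, size_t len);
};

struct demo_socket {
    const struct demo_proto_ops *ops; /* set once, when the socket is created */
    char last_op[16];                 /* records which implementation handled the call */
};

/* Protocol-specific implementations (hypothetical). */
static int demo_send_packet(struct demo_socket *sock, const char *buf, size_t len)
{
    (void)buf;
    strcpy(sock->last_op, "packet");
    return (int)len;
}

static int demo_send_stream(struct demo_socket *sock, const char *buf, size_t len)
{
    (void)buf;
    strcpy(sock->last_op, "stream");
    return (int)len;
}

/* Two ops tables for the same family (30 is AF_TIPC), like packet_ops and stream_ops. */
static const struct demo_proto_ops demo_packet_ops = { .family = 30, .sendmsg = demo_send_packet };
static const struct demo_proto_ops demo_stream_ops = { .family = 30, .sendmsg = demo_send_stream };

/* Generic layer: knows nothing about the protocol and only calls through the table. */
static int demo_sock_sendmsg(struct demo_socket *sock, const char *buf, size_t len)
{
    return sock->ops->sendmsg(sock, buf, len);
}
```

The generic function never needs to change when a new protocol or socket type is added. Only a new ops table is required.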

The kernel defines a static global array:

```c
static struct net_proto_family *net_families[NPROTO];
```

This definition is in the kernel's socket.c. When Linux boots, the init process calls sock_init() to initialize the array. The call path inside init is start_kernel → rest_init → kernel_thread(init, NULL, CLONE_FS | CLONE_SIGHAND) → init → do_basic_setup → sock_init, which clears every entry:

```c
for (i = 0; i < NPROTO; i++)
    net_families[i] = NULL;
```

In other words, each protocol corresponds to one entry of this array. socket.c also defines the function used to register a protocol family:

```c
int sock_register(struct net_proto_family *ops)
{
    int err;

    /* The family number is used as an index into net_families[]. */
    if (ops->family >= NPROTO) {
        printk(KERN_CRIT "protocol %d >= NPROTO(%d)\n", ops->family, NPROTO);
        return -ENOBUFS;
    }

    net_family_write_lock();
    err = -EEXIST;
    /* Only register if no other protocol already owns this slot. */
    if (net_families[ops->family] == NULL) {
        net_families[ops->family] = ops;
        err = 0;
    }
    net_family_write_unlock();

    printk(KERN_INFO "NET: Registered protocol family %d\n",
           ops->family);
    return err;
}
```

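The main job of this function is to store a pointer to the protocol's net_proto_family structure in the net_families entry indexed by the protocol number.

Later, given a socket of that protocol, the kernel uses the protocol number to find the entry in net_families. From it, the kernel obtains the protocol's actual create function and calls it whenever a new socket of that protocol is needed.

Where is sock_register called? Usually during protocol initialization. For example, TIPC is implemented in Linux as a module whose entry point is declared with module_init(tipc_init);. tipc_init reaches sock_register through this call chain:

tipc_init → start_core → start_core_base → socket_init → sock_register(&tipc_family_ops);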
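The register-then-look-up pattern can be sketched in user space as follows. This is a hypothetical model, not kernel code:

- demo_sock_register(), demo_sock_create() and demo_tipc_create() are illustrative stand-ins.
- The locking done by net_family_write_lock() is omitted because the sketch is single-threaded.
- Only the table size 64 (NPROTO) and the family value 30 (AF_TIPC) come from the text.

```c
#include <errno.h>
#include <stddef.h>

#define DEMO_NPROTO 64 /* mirrors the NPROTO value quoted above */

struct demo_socket {
    int type;
    int created_by; /* family whose create() initialised this socket */
};

struct demo_net_proto_family {
    int family;
    int (*create)(struct demo_socket *sock, int protocol);
};

/* One slot per protocol family, all NULL initially (like sock_init()). */
static const struct demo_net_proto_family *demo_net_families[DEMO_NPROTO];

/* Same checks as sock_register(): range check, then refuse to overwrite a slot.
 * Unlike the kernel code, negative family numbers are rejected too. */
static int demo_sock_register(const struct demo_net_proto_family *ops)
{
    if (ops->family < 0 || ops->family >= DEMO_NPROTO)
        return -ENOBUFS;
    if (demo_net_families[ops->family] != NULL)
        return -EEXIST;
    demo_net_families[ops->family] = ops;
    return 0;
}

/* Roughly what the socket-creation path does with the table:
 * index it by family and call that family's create(). */
static int demo_sock_create(int family, int type, int protocol, struct demo_socket *sock)
{
    if (family < 0 || family >= DEMO_NPROTO || demo_net_families[family] == NULL)
        return -EAFNOSUPPORT;
    sock->type = type;
    return demo_net_families[family]->create(sock, protocol);
}

/* Hypothetical create function standing in for tipc_create(). */
static int demo_tipc_create(struct demo_socket *sock, int protocol)
{
    if (protocol != 0)
        return -EPROTONOSUPPORT;
    sock->created_by = 30;
    return 0;
}

static const struct demo_net_proto_family demo_tipc_family = {
    .family = 30,
    .create = demo_tipc_create,
};
```

Before registration, creating a family-30 socket fails with -EAFNOSUPPORT. After demo_sock_register(&demo_tipc_family), the same call reaches demo_tipc_create().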

tipc_family_ops is defined as follows:

```c
static struct net_proto_family tipc_family_ops = {
    .owner  = THIS_MODULE,
    .family = AF_TIPC,
    .create = tipc_create
};
```

AF_TIPC is TIPC's protocol (address family) identifier, and its value is 30. tipc_create is TIPC's socket creation function:

```c
static int tipc_create(struct socket *sock, int protocol)
{
    struct tipc_sock *tsock;
    struct tipc_port *port;
    struct sock *sk;
    u32 ref;
    struct task_struct *tsk;
    int size = (sizeof(tsock->comm) < sizeof(tsk->comm)) ?
               sizeof(tsock->comm) : sizeof(tsk->comm);

    if ((protocol < 0) || (protocol >= MAX_TIPC_STACKS)) {
        warn("Invalid protocol number : %d, permitted range 0 - %d.\n",
             protocol, MAX_TIPC_STACKS);
        return -EPROTONOSUPPORT;
    }

    if (protocol != 0) {
        int vres = handle_protocol(sock, protocol);
        return vres;
    }

    ref = tipc_createport_raw(0, &dispatch, &wakeupdispatch,
                              TIPC_LOW_IMPORTANCE, 0);
    if (unlikely(!ref))
        return -ENOMEM;

    sock->state = SS_UNCONNECTED;

    /* Pick the operation table according to the socket type. */
    switch (sock->type) {
    case SOCK_STREAM:
        sock->ops = &stream_ops;
        break;
    case SOCK_SEQPACKET:
        sock->ops = &packet_ops;
        break;
    case SOCK_DGRAM:
        tipc_set_portunreliable(ref, 1);
        /* fall through */
    case SOCK_RDM:
        tipc_set_portunreturnable(ref, 1);
        sock->ops = &msg_ops;
        sock->state = SS_READY;
        break;
    default:
        tipc_deleteport(ref);
        return -EPROTOTYPE;
    }

#if LINUX_VERSION_CODE >= KERNEL_VERSION(2,6,12)
    sk = sk_alloc(AF_TIPC, GFP_KERNEL, &tipc_proto, 1);
#else
    sk = sk_alloc(AF_TIPC, GFP_KERNEL, 1, tipc_cache);
#endif
    if (!sk) {
        tipc_deleteport(ref);
        return -ENOMEM;
    }

    sock_init_data(sock, sk);
    init_waitqueue_head(sk->sk_sleep);
    sk->sk_rcvtimeo = 8 * HZ;

    tsock = tipc_sk(sk);
    port = tipc_get_port(ref);

    tsock->p = port;
    port->usr_handle = tsock;

    init_MUTEX(&tsock->sem);

    /* Record the creating task's pid and command name. */
    memset(tsock->comm, 0, size);
    tsk = current;
    task_lock(tsk);
    tsock->pid = tsk->pid;
    memcpy(tsock->comm, tsk->comm, size);
    task_unlock(tsk);
    tsock->comm[size-1] = 0;

    tsock->overload_hwm = 0;
    tsock->ovld_limit = tipc_persocket_overload;

    dbg("sock_create: %x\n", tsock);
    atomic_inc(&tipc_user_count);
    return 0;
}
```

As the function shows, the new socket's ops pointer is assigned a different set of operations depending on the socket type, for example SOCK_STREAM or SOCK_SEQPACKET:

```c
static struct proto_ops packet_ops = {
    .owner      = THIS_MODULE,
    .family     = AF_TIPC,
    .release    = release,
    .bind       = bind,
    .connect    = connect,
    .socketpair = no_skpair,
    .accept     = accept,
    .getname    = get_name,
    .poll       = poll,
    .ioctl      = ioctl,
    .listen     = listen,
    .shutdown   = shutdown,
    .setsockopt = setsockopt,
    .getsockopt = getsockopt,
    .sendmsg    = send_packet,
    .recvmsg    = recv_msg,
    .mmap       = no_mmap,
    .sendpage   = no_sendpage
};

static struct proto_ops stream_ops = {
    .owner      = THIS_MODULE,
    .family     = AF_TIPC,
    .release    = release,
    .bind       = bind,
    .connect    = connect,
    .socketpair = no_skpair,
    .accept     = accept,
    .getname    = get_name,
    .poll       = poll,
    .ioctl      = ioctl,
    .listen     = listen,
    .shutdown   = shutdown,
    .setsockopt = setsockopt,
    .getsockopt = getsockopt,
    .sendmsg    = send_stream,
    .recvmsg    = recv_stream,
    .mmap       = no_mmap,
    .sendpage   = no_sendpage
};
```
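The type switch in tipc_create(), including the deliberate fall-through from SOCK_DGRAM into SOCK_RDM, can be modelled in a few lines. This is a hypothetical sketch, not the TIPC code:

- demo_select_ops() returns an enum naming the ops table instead of assigning sock->ops.
- The port flags are plain struct fields rather than calls to tipc_set_portunreliable() and tipc_set_portunreturnable().
- The DEMO_SOCK_* values are assumed to mirror Linux's SOCK_* constants.

```c
/* Assumed to match Linux: SOCK_STREAM 1, SOCK_DGRAM 2, SOCK_RDM 4, SOCK_SEQPACKET 5. */
enum demo_sock_type {
    DEMO_SOCK_STREAM = 1,
    DEMO_SOCK_DGRAM = 2,
    DEMO_SOCK_RDM = 4,
    DEMO_SOCK_SEQPACKET = 5
};

/* Which ops table tipc_create() would install. */
enum demo_ops { DEMO_OPS_NONE, DEMO_OPS_STREAM, DEMO_OPS_PACKET, DEMO_OPS_MSG };

struct demo_port {
    int unreliable;   /* stands in for tipc_set_portunreliable(ref, 1) */
    int unreturnable; /* stands in for tipc_set_portunreturnable(ref, 1) */
};

static enum demo_ops demo_select_ops(int type, struct demo_port *port, int *ready)
{
    *ready = 0; /* SS_READY is only set for the connectionless types */
    switch (type) {
    case DEMO_SOCK_STREAM:
        return DEMO_OPS_STREAM;
    case DEMO_SOCK_SEQPACKET:
        return DEMO_OPS_PACKET;
    case DEMO_SOCK_DGRAM:
        port->unreliable = 1;
        /* fall through */
    case DEMO_SOCK_RDM:
        port->unreturnable = 1;
        *ready = 1;
        return DEMO_OPS_MSG;
    default:
        /* tipc_create() deletes the port and returns -EPROTOTYPE here */
        return DEMO_OPS_NONE;
    }
}
```

Omitting the break after the datagram case is what makes a SOCK_DGRAM socket both unreliable and unreturnable. A SOCK_RDM socket is only unreturnable, and both end up with msg_ops.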

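Everything discussed so far lives inside the Linux kernel. An application that programs with sockets does not talk to these kernel interfaces directly. It runs in user space, and these interfaces can only be called from kernel space.

So how does an application reach them? The answer lies in glibc. Linux applications call the socket functions provided by glibc, and glibc has only one set of socket functions. Through the mechanism described above, that single set maps onto the socket functions of every protocol. But how does glibc call into the kernel?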

Let's go back to the kernel's socket.c. It also defines the following function:

```c
#ifdef __ARCH_WANT_SYS_SOCKETCALL

/* Number of argument bytes to copy from user space, indexed by call number. */
#define AL(x) ((x) * sizeof(unsigned long))
static unsigned char nargs[18] = {AL(0),AL(3),AL(3),AL(3),AL(2),AL(3),AL(3),AL(3),AL(4),AL(4),AL(4),AL(6),
                                  AL(6),AL(2),AL(5),AL(5),AL(3),AL(3)};
#undef AL

asmlinkage long sys_socketcall(int call, unsigned long __user *args)
{
    unsigned long a[6];
    unsigned long a0, a1;
    int err;

    if (call < 1 || call > SYS_RECVMSG)
        return -EINVAL;

    /* Copy exactly as many argument words as this call needs. */
    if (copy_from_user(a, args, nargs[call]))
        return -EFAULT;

    err = audit_socketcall(nargs[call] / sizeof(unsigned long), a);
    if (err)
        return err;

    a0 = a[0];
    a1 = a[1];

    trace_socket_call(call, a0);

    switch (call) {
    case SYS_SOCKET:
        err = sys_socket(a0, a1, a[2]);
        break;
    case SYS_BIND:
        err = sys_bind(a0, (struct sockaddr __user *)a1, a[2]);
        break;
    case SYS_CONNECT:
        err = sys_connect(a0, (struct sockaddr __user *)a1, a[2]);
        break;
    case SYS_LISTEN:
        err = sys_listen(a0, a1);
        break;
    case SYS_ACCEPT:
        err = sys_accept(a0, (struct sockaddr __user *)a1, (int __user *)a[2]);
        break;
    case SYS_GETSOCKNAME:
        err = sys_getsockname(a0, (struct sockaddr __user *)a1, (int __user *)a[2]);
        break;
    case SYS_GETPEERNAME:
        err = sys_getpeername(a0, (struct sockaddr __user *)a1, (int __user *)a[2]);
        break;
    case SYS_SOCKETPAIR:
        err = sys_socketpair(a0, a1, a[2], (int __user *)a[3]);
        break;
    case SYS_SEND:
        err = sys_send(a0, (void __user *)a1, a[2], a[3]);
        break;
    case SYS_SENDTO:
        err = sys_sendto(a0, (void __user *)a1, a[2], a[3],
                         (struct sockaddr __user *)a[4], a[5]);
        break;
    case SYS_RECV:
        err = sys_recv(a0, (void __user *)a1, a[2], a[3]);
        break;
    case SYS_RECVFROM:
        err = sys_recvfrom(a0, (void __user *)a1, a[2], a[3],
                           (struct sockaddr __user *)a[4], (int __user *)a[5]);
        break;
    case SYS_SHUTDOWN:
        err = sys_shutdown(a0, a1);
        break;
    case SYS_SETSOCKOPT:
        err = sys_setsockopt(a0, a1, a[2], (char __user *)a[3], a[4]);
        break;
    case SYS_GETSOCKOPT:
        err = sys_getsockopt(a0, a1, a[2], (char __user *)a[3], (int __user *)a[4]);
        break;
    case SYS_SENDMSG:
        err = sys_sendmsg(a0, (struct msghdr __user *)a1, a[2]);
        break;
    case SYS_RECVMSG:
        err = sys_recvmsg(a0, (struct msghdr __user *)a1, a[2]);
        break;
    default:
        err = -EINVAL;
        break;
    }
    return err;
}

#endif
```
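The first thing sys_socketcall() does is use nargs[] to decide how many bytes of arguments to copy from user space. The hypothetical helper below reproduces only that table lookup in user space, so the per-call argument counts can be checked. DEMO_SYS_RECVMSG is an assumed stand-in for the kernel's SYS_RECVMSG (17).

```c
#include <errno.h>

/* Same table as the kernel's nargs[]: argument bytes per socketcall number. */
#define AL(x) ((x) * sizeof(unsigned long))
static const unsigned char demo_nargs[18] = {
    AL(0), AL(3), AL(3), AL(3), AL(2), AL(3), AL(3), AL(3), AL(4),
    AL(4), AL(4), AL(6), AL(6), AL(2), AL(5), AL(5), AL(3), AL(3)
};
#undef AL

#define DEMO_SYS_RECVMSG 17 /* highest valid call number */

/* Number of unsigned long arguments sys_socketcall() would copy_from_user()
 * for this call number, or -EINVAL for an out-of-range number. */
static int demo_socketcall_argc(int call)
{
    if (call < 1 || call > DEMO_SYS_RECVMSG)
        return -EINVAL;
    return (int)(demo_nargs[call] / sizeof(unsigned long));
}
```

For example, call 5 (accept) copies three words and call 11 (sendto) copies six. This matches the a0, a1, a[2]... arguments used in the corresponding switch cases.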

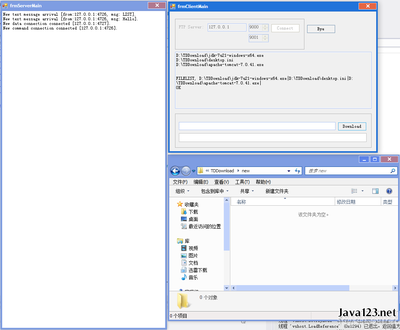

sys_socketcall is a system call. On i386, every socket function in glibc enters kernel space through it. Take accept as an example. In glibc, accept is implemented in sysdeps/unix/sysv/linux/accept.S:

```asm
#define socket accept
#define __socket __libc_accept
#define NARGS 3
#define NEED_CANCELLATION
#include <socket.S>
libc_hidden_def (accept)
```

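This file, together with socket.S, is the key code that takes accept() from user mode into kernel mode. accept.S defines socket as accept and __socket as __libc_accept. It also sets NARGS to 3, meaning the call takes three arguments. It then includes socket.S, shown below: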

```asm
#include <sysdep-cancel.h>
#include <socketcall.h>
#include <tls.h>

#define P(a, b) P2(a, b)
#define P2(a, b) a##b

    .text

#ifndef __socket
# ifndef NO_WEAK_ALIAS
#  define __socket P(__,socket)
# else
#  define __socket socket
# endif
#endif

    .globl __socket
    cfi_startproc
ENTRY (__socket)
#if defined NEED_CANCELLATION && defined CENABLE
    SINGLE_THREAD_P
    jne 1f
#endif

    /* Save %ebx (callee-saved) in %edx.  */
    movl %ebx, %edx
    cfi_register (3, 2)

    movl $SYS_ify(socketcall), %eax   /* System call number in %eax.  */
    movl $P(SOCKOP_,socket), %ebx     /* Subcode is the first argument.  */
    lea 4(%esp), %ecx                 /* Address of the arguments is the second.  */

    ENTER_KERNEL

    /* Restore %ebx.  */
    movl %edx, %ebx
    cfi_restore (3)

    /* Return values from -125 to -1 are error codes.  */
    cmpl $-125, %eax
    jae SYSCALL_ERROR_LABEL

L(pseudo_end):
    ret

#if defined NEED_CANCELLATION && defined CENABLE
    /* Multi-threaded path: enable cancellation around the call.  */
1:  pushl %esi
    cfi_adjust_cfa_offset(4)

    CENABLE
    movl %eax, %esi
    cfi_offset(6, -8)

    movl %ebx, %edx
    cfi_register (3, 2)

    movl $SYS_ify(socketcall), %eax
    movl $P(SOCKOP_,socket), %ebx
    lea 8(%esp), %ecx                 /* 8, not 4: %esi was pushed.  */

    ENTER_KERNEL

    movl %edx, %ebx
    cfi_restore (3)

    xchgl %esi, %eax
    CDISABLE
    movl %esi, %eax
    popl %esi
    cfi_restore (6)
    cfi_adjust_cfa_offset(-4)

    cmpl $-125, %eax
    jae SYSCALL_ERROR_LABEL

    ret
#endif
    cfi_endproc
PSEUDO_END (__socket)

#ifndef NO_WEAK_ALIAS
weak_alias (__socket, socket)
#endif
```
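In C terms, the stub does roughly the following for accept(). It puts the socketcall subcode in one register and the address of the argument words in another. The sketch below is hypothetical:

- demo_socketcall() is a fake stand-in for the kernel that only records what it receives.
- The wrapper builds the argument array explicitly. On i386 the caller's stack arguments are already contiguous, so socket.S simply passes 4(%esp), or 8(%esp) after pushing %esi.
- Casting pointers to unsigned long mirrors the kernel's unsigned long a[6] and assumes a Linux data model where a pointer fits in an unsigned long.

```c
#define DEMO_SOCKOP_accept 5 /* value from socketcall.h */

/* Fake "kernel" side (hypothetical): remembers the subcode and three argument words. */
static int demo_seen_call;
static unsigned long demo_seen_args[3];

static long demo_socketcall(int call, const unsigned long *args)
{
    demo_seen_call = call;
    for (int i = 0; i < 3; i++)
        demo_seen_args[i] = args[i];
    return 42; /* pretend this is the new file descriptor */
}

/* What socket.S does for accept(): subcode in one register (%ebx),
 * address of the argument block in another (%ecx). */
static long demo_accept(int fd, void *addr, unsigned int *addrlen)
{
    unsigned long args[3];

    args[0] = (unsigned long)fd;
    args[1] = (unsigned long)addr;
    args[2] = (unsigned long)addrlen;
    return demo_socketcall(DEMO_SOCKOP_accept, args);
}
```

Because one entry point serves all 17 socket calls, every wrapper differs only in its subcode and argument count. This is why accept.S can be just a few #define lines followed by #include <socket.S>.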

In sysdeps/unix/sysv/linux/i386/sysdep.h:

```c
#undef SYS_ify
#define SYS_ify(syscall_name) __NR_##syscall_name
```

So SYS_ify(socketcall) expands to __NR_socketcall.

In the kernel, linux/include/asm/unistd.h defines:

```c
#define __NR_restart_syscall  0
#define __NR_exit             1
#define __NR_fork             2
#define __NR_read             3
...
#define __NR_socketcall       102
...
```

Since __NR_socketcall is defined as 102, the instruction movl $SYS_ify(socketcall), %eax loads 102, the number of this system call, into eax.
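The SYS_ify step is ordinary preprocessor token pasting. The snippet below reproduces it with a hypothetical DEMO_NR_ prefix so that it cannot collide with the real __NR_* macros from the system headers. The value 102 is the i386 number quoted above.

```c
/* Hypothetical stand-in for the one unistd.h entry we need (i386 value). */
#define DEMO_NR_socketcall 102

/* Same shape as glibc's SYS_ify: paste the prefix onto the call name. */
#define DEMO_SYS_ify(syscall_name) DEMO_NR_##syscall_name

static int demo_syscall_number(void)
{
    return DEMO_SYS_ify(socketcall); /* expands to DEMO_NR_socketcall */
}
```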

Next comes movl $P(SOCKOP_,socket), %ebx. The corresponding definitions are in glibc's sysdeps/unix/sysv/linux/socketcall.h:

```c
#define SOCKOP_socket       1
#define SOCKOP_bind         2
#define SOCKOP_connect      3
#define SOCKOP_listen       4
#define SOCKOP_accept       5
#define SOCKOP_getsockname  6
#define SOCKOP_getpeername  7
#define SOCKOP_socketpair   8
#define SOCKOP_send         9
#define SOCKOP_recv         10
#define SOCKOP_sendto       11
#define SOCKOP_recvfrom     12
#define SOCKOP_shutdown     13
#define SOCKOP_setsockopt   14
#define SOCKOP_getsockopt   15
#define SOCKOP_sendmsg      16
#define SOCKOP_recvmsg      17
```
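socket.S goes through two macros, P and P2, instead of pasting directly because ## suppresses macro expansion of its own operands. Only the outer P() lets the definition of socket from accept.S take effect before P2() pastes the tokens. The compilable sketch below copies P, P2 and two SOCKOP_ values from the glibc code above to show the difference. Since it defines socket as a macro, it must not be combined with a file that includes <sys/socket.h>.

```c
/* Two-level paste, as in socket.S. */
#define P(a, b) P2(a, b)
#define P2(a, b) a##b

#define SOCKOP_socket 1
#define SOCKOP_accept 5

/* What accept.S does before including socket.S. */
#define socket accept

/* Direct paste: operands of ## are not expanded, giving SOCKOP_socket (1). */
static int demo_direct_paste(void) { return P2(SOCKOP_, socket); }

/* Via P: socket is expanded to accept first, giving SOCKOP_accept (5). */
static int demo_expanded_paste(void) { return P(SOCKOP_, socket); }
```

The same mechanism turns __socket into __libc_accept and, through weak_alias (__socket, socket), exports the result under the name accept.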

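So this line loads the operation code into ebx. Because accept.S defined socket as accept, P(SOCKOP_,socket) becomes SOCKOP_accept, which is 5. In sysdeps/unix/sysv/linux/i386/sysdep.h, ENTER_KERNEL is defined as: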

```c
#ifdef I386_USE_SYSENTER
# ifdef SHARED
#  define ENTER_KERNEL call *%gs:SYSINFO_OFFSET
# else
#  define ENTER_KERNEL call *_dl_sysinfo
# endif
#else
# define ENTER_KERNEL int $0x80
#endif
```

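This traps into the kernel through the int $0x80 software interrupt. When I386_USE_SYSENTER is defined, it instead calls the kernel-provided entry point whose address is stored in _dl_sysinfo or at %gs:SYSINFO_OFFSET, which can use the faster sysenter instruction. The kernel side is in linux/arch/i386/kernel/entry.S: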

```asm
...
    # system call handler stub
ENTRY(system_call)
    pushl %eax                  # save orig_eax
    SAVE_ALL
    GET_THREAD_INFO(%ebp)
                                # system call tracing in operation / emulation
    testw $(_TIF_SYSCALL_EMU|_TIF_SYSCALL_TRACE|_TIF_SECCOMP|_TIF_SYSCALL_AUDIT),TI_flags(%ebp)
    jnz syscall_trace_entry
    cmpl $(nr_syscalls), %eax
    jae syscall_badsys
syscall_call:
...
#ifdef CONFIG_DPA_ACCOUNTING
    CHECK_DPA(%eax,no_dpa_syscall_enter,dpa_syscall_enter)
#endif
    call *sys_call_table(,%eax,4)
    movl %eax,EAX(%esp)         # store the return value
syscall_exit:
...
```

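sys_call_table is defined in linux/arch/i386/kernel/syscall_table.S, and entry 102 of that table is the socketcall system call. Since eax holds 102, call *sys_call_table(,%eax,4) (table base plus eax × 4 bytes) invokes sys_socketcall. As the analysis of sys_socketcall above shows, that function is essentially a dispatcher for the individual socket calls.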
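The dispatch in entry.S can be mimicked in C: bounds-check the number in eax, then call through the table. In the sketch below, the table size, demo_sys_socketcall() and the other demo_ names are hypothetical. Only the number 102 and the -ENOSYS result for out-of-range numbers (the syscall_badsys path) correspond to the real kernel. Empty slots fall back to a stand-in for sys_ni_syscall, which the real table uses for unimplemented calls.

```c
#include <errno.h>
#include <stddef.h>

#define DEMO_NR_socketcall 102
#define DEMO_NR_SYSCALLS   103 /* hypothetical; the real table is larger */

typedef long (*demo_syscall_fn)(unsigned long ebx, unsigned long ecx);

/* Stand-in for sys_ni_syscall: "not implemented". */
static long demo_sys_ni_syscall(unsigned long ebx, unsigned long ecx)
{
    (void)ebx;
    (void)ecx;
    return -ENOSYS;
}

/* Pretend socketcall: just reports which sub-call (%ebx) was requested. */
static long demo_sys_socketcall(unsigned long call, unsigned long args)
{
    (void)args;
    return (long)call;
}

static demo_syscall_fn demo_sys_call_table[DEMO_NR_SYSCALLS] = {
    [DEMO_NR_socketcall] = demo_sys_socketcall,
};

/* Mimics system_call: unsigned bounds check (cmpl/jae), then table call. */
static long demo_system_call(unsigned long nr, unsigned long ebx, unsigned long ecx)
{
    if (nr >= DEMO_NR_SYSCALLS)
        return -ENOSYS; /* syscall_badsys */
    demo_syscall_fn fn = demo_sys_call_table[nr];
    if (fn == NULL)
        fn = demo_sys_ni_syscall;
    return fn(ebx, ecx);
}
```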

So when an application creates a TIPC socket with the following line:

```c
int sd = socket(AF_TIPC, SOCK_SEQPACKET, 0);
```

the call chain starts in glibc's assembly stub socket.S, which issues the sys_socketcall system call. Inside the kernel it continues sys_socket → sock_create → __sock_create → tipc_create. Because this socket is of type SOCK_SEQPACKET, tipc_create sets its ops pointer to packet_ops, the operation table listed earlier, whose sendmsg is send_packet and whose recvmsg is recv_msg.

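From then on, when the application calls glibc's bind, recvmsg and so on, each call goes through the system call and ends up in the corresponding packet_ops function of this TIPC socket.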

爱华网
